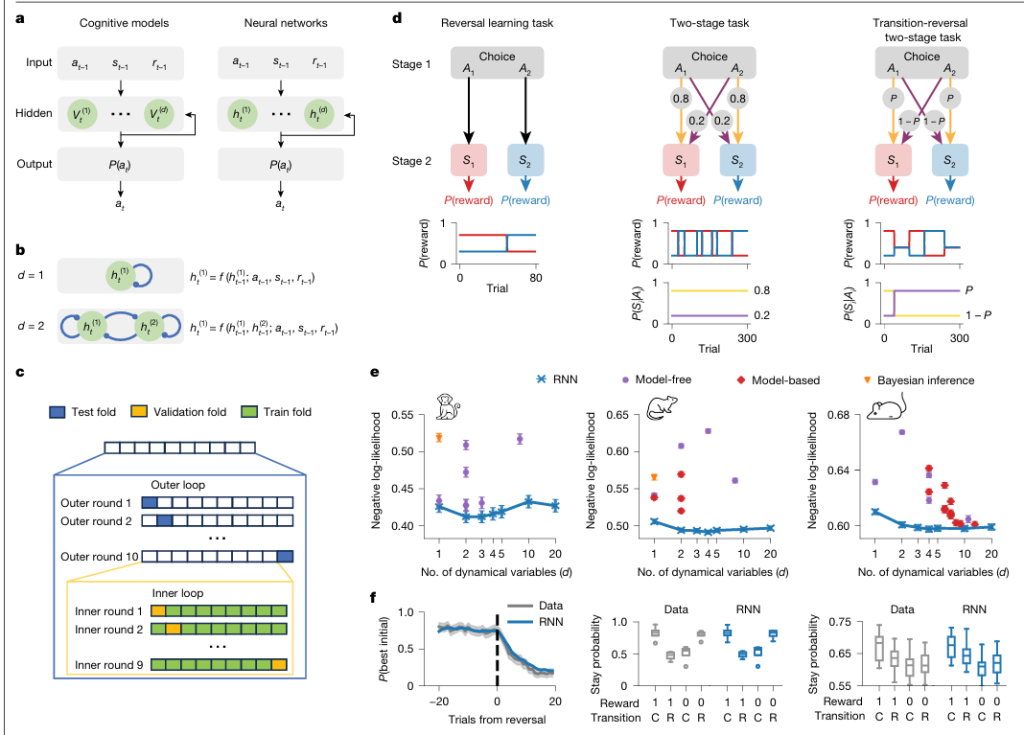

A recent study in computational neuroscience introduces a framework that uses tiny recurrent neural networks (RNNs) to model the cognitive processes behind biological decision-making. It challenges traditional approaches such as Bayesian inference and reinforcement learning (RL) models by offering an alternative that is both more flexible and interpretable.

Instead of relying on complex, high-dimensional models, this work shows that RNNs with as few as 1 to 4 units can outperform classical cognitive models, offering both predictive power and transparency in understanding behavior.

Why Tiny RNNs?

Traditional cognitive models, though elegant, often impose rigid assumptions that fail to capture the nuances of real-world behavior. Large neural networks, on the other hand, are powerful but hard to interpret. Tiny RNNs strike a balance between the two: they are flexible enough to learn from data and simple enough to interpret, even at the level of individual subjects.

Each tiny RNN is trained to predict an individual subject's trial-by-trial choices in a reward-learning task, which makes these models well suited to uncovering the underlying cognitive mechanisms.

Experimental Design

The study evaluates behavior across six reward-learning tasks using data from both animals and humans:

Animal Tasks

Reversal Learning

Subjects adapt to shifting reward probabilities.

Macaques: 15,500 trials

Mice: 67,009 trials

Two-Stage Task

Differentiates model-based and model-free learning.

Rats: 33,957 trials

Mice: 133,974 trials

Transition-Reversal Two-Stage Task

Adds unpredictability by reversing action-to-state transitions.

Mice: 230,237 trials

Human Tasks

Three-Armed Reversal Learning – 1,010 participants

Four-Armed Drifting Bandit – 975 participants

Original Two-Stage Task – 1,961 participants
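To make the reversal-learning setup concrete, here is a minimal Python sketch of a two-armed reversal task together with a standard Q-learning agent, the kind of classical RL model the tiny RNNs are compared against. The reward probabilities (0.8/0.2), the fixed 50-trial block length, and the agent's parameters (`alpha`, `beta`) are illustrative assumptions, not the study's task settings; the real datasets may schedule reversals differently.

```python
import math
import random


class ReversalBandit:
    """Two-armed bandit whose reward probabilities swap every `block_len` trials.

    The fixed block length and the 0.8/0.2 probabilities are illustrative
    assumptions, not the study's exact task parameters.
    """

    def __init__(self, p_high=0.8, p_low=0.2, block_len=50, seed=0):
        self.probs = [p_high, p_low]  # arm 0 starts as the better arm
        self.block_len = block_len
        self.trial = 0
        self.rng = random.Random(seed)

    def step(self, action):
        """Return 1 (rewarded) or 0 for choosing arm `action` (0 or 1)."""
        reward = 1 if self.rng.random() < self.probs[action] else 0
        self.trial += 1
        if self.trial % self.block_len == 0:
            self.probs.reverse()  # reversal: the good arm becomes the bad arm
        return reward


def run_q_learner(env, n_trials, alpha=0.3, beta=5.0, seed=1):
    """Simulate a classical Q-learning agent with softmax choice.

    Returns a list of (action, reward) pairs, the per-trial record
    a behavioral model would be fit to.
    """
    rng = random.Random(seed)
    q = [0.5, 0.5]
    history = []
    for _ in range(n_trials):
        # With two actions, softmax reduces to a logistic of the value difference.
        p0 = 1.0 / (1.0 + math.exp(-beta * (q[0] - q[1])))
        action = 0 if rng.random() < p0 else 1
        reward = env.step(action)
        # Rescorla-Wagner update with a constant learning rate alpha.
        q[action] += alpha * (reward - q[action])
        history.append((action, reward))
    return history


if __name__ == "__main__":
    history = run_q_learner(ReversalBandit(), n_trials=200)
    total = sum(r for _, r in history)
    print(f"{total} rewards in {len(history)} trials")
```

The constant learning rate in the update line is exactly the sort of fixed assumption that classical models build in and that a fitted tiny RNN is free to relax.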

Model Architecture and Training

All models receive the same input structure (previous action, state, and reward) and output an action probability. The tiny RNNs are set up as follows:

Tiny RNNs:

Built with Gated Recurrent Units (GRUs), using just 1–4 units for animal models and up to 20 for complex human behavior.

Special variants such as switching GRUs improve performance with fewer parameters.

Training Strategy:

Models are trained via maximum likelihood estimation with 10-fold nested cross-validation and early stopping.

For small human datasets, a knowledge distillation strategy is used:

A large “teacher” RNN is trained on multi-subject data.

Tiny “student” RNNs learn individual behavior from the teacher’s policy.
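The shared input/output structure and the two training objectives can be sketched in plain Python. This is an illustrative forward pass only, assuming one-hot encodings of the previous action and state plus a scalar reward, a bias-free GRU cell following the standard GRU equations, and a linear softmax readout; the names `TinyGRUPolicy`, `encode`, `nll`, and `distillation_loss` are hypothetical. Actually fitting the weights by maximum likelihood would normally use an autodiff framework, and nested cross-validation and early stopping are not shown.

```python
import math
import random


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def encode(prev_action, prev_state, prev_reward, n_actions=2, n_states=2):
    """Concatenate one-hot previous action, one-hot state, and scalar reward."""
    a = [1.0 if i == prev_action else 0.0 for i in range(n_actions)]
    s = [1.0 if i == prev_state else 0.0 for i in range(n_states)]
    return a + s + [float(prev_reward)]


class TinyGRUPolicy:
    """A d-unit GRU followed by a linear readout to action probabilities."""

    def __init__(self, n_in, n_hidden=2, n_actions=2, seed=0):
        rng = random.Random(seed)

        def init(rows, cols):
            return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

        # Input (W) and recurrent (U) weights for the reset (r), update (z),
        # and candidate (n) computations; biases omitted for brevity.
        self.W = {g: init(n_hidden, n_in) for g in "rzn"}
        self.U = {g: init(n_hidden, n_hidden) for g in "rzn"}
        self.W_out = init(n_actions, n_hidden)
        self.n_hidden = n_hidden

    def step(self, h, x):
        """One GRU update: h' = (1 - z) * n + z * h."""
        r = [sigmoid(a + b) for a, b in zip(matvec(self.W["r"], x), matvec(self.U["r"], h))]
        z = [sigmoid(a + b) for a, b in zip(matvec(self.W["z"], x), matvec(self.U["z"], h))]
        wn, un = matvec(self.W["n"], x), matvec(self.U["n"], h)
        n = [math.tanh(a + ri * b) for a, ri, b in zip(wn, r, un)]
        return [(1 - zi) * ni + zi * hi for zi, ni, hi in zip(z, n, h)]

    def policies(self, inputs):
        """Run the GRU over a session and return P(action) for every trial."""
        h = [0.0] * self.n_hidden
        probs = []
        for x in inputs:
            h = self.step(h, x)
            probs.append(softmax(matvec(self.W_out, h)))
        return probs


def nll(probs, actions):
    """Negative log-likelihood of the observed actions (the MLE objective)."""
    return -sum(math.log(p[a]) for p, a in zip(probs, actions))


def distillation_loss(student_probs, teacher_probs):
    """Cross-entropy of the student's policy against the teacher's policy."""
    return -sum(
        pt * math.log(ps)
        for s, t in zip(student_probs, teacher_probs)
        for ps, pt in zip(s, t)
    )


if __name__ == "__main__":
    # Hypothetical session: (action, state, reward) per trial.
    session = [(0, 0, 1), (0, 0, 0), (1, 1, 1), (1, 1, 1), (0, 0, 0)]
    inputs = [encode(a, s, r) for a, s, r in session[:-1]]  # trial t-1 ...
    targets = [a for a, _, _ in session[1:]]                # ... predicts action t
    model = TinyGRUPolicy(n_in=5, n_hidden=2)
    print("NLL:", nll(model.policies(inputs), targets))
```

In the distillation setting, `teacher_probs` would come from the large multi-subject RNN, so the student is trained against the teacher's full action distribution on each trial rather than only the single action the subject took.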

Key Results

Superior Performance

Tiny RNNs consistently outperform all the classical models they were compared against, across tasks and species.

Two to four units sufficed for the animal tasks.

Even 2-unit RNNs beat classical models on human data.

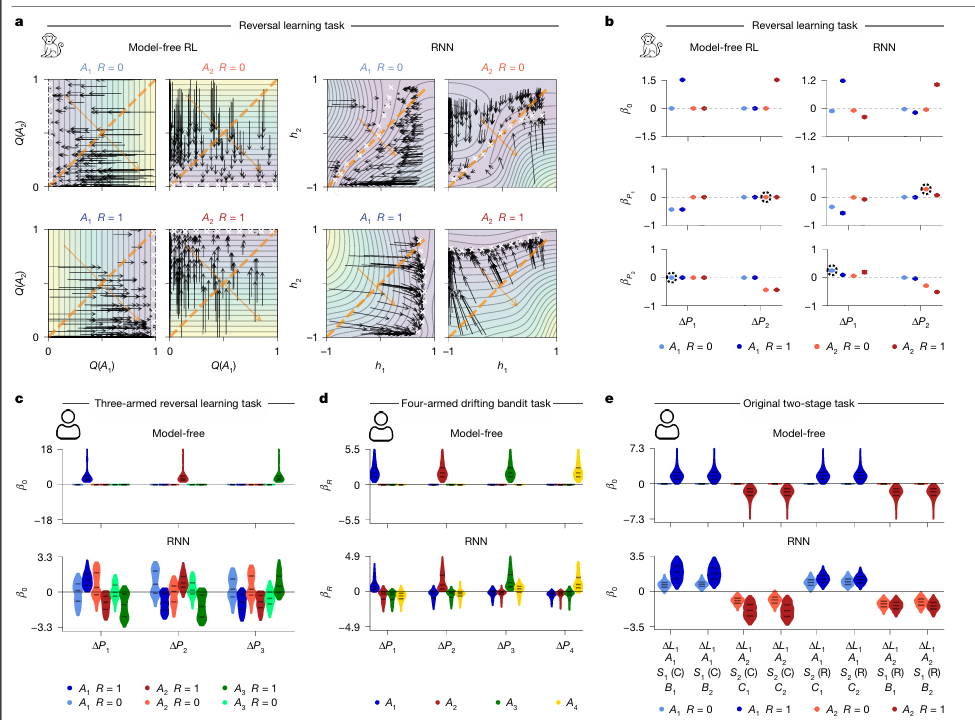
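Claims that one model outperforms another in this kind of work usually rest on held-out likelihood. The sketch below assumes performance is scored as the trial-averaged negative log-likelihood of each subject's actual choices on held-out sessions (lower is better), and shows a simple k-fold split over sessions. The interleaved fold assignment, the function names, and the probability values in the usage block are illustrative assumptions; the inner validation split that a nested scheme uses for early stopping is omitted.

```python
import math


def trial_averaged_nll(p_chosen):
    """Mean negative log-likelihood per held-out trial (lower is better).

    `p_chosen[t]` is the probability the fitted model assigned to the action
    the subject actually took on held-out trial t.
    """
    return -sum(math.log(p) for p in p_chosen) / len(p_chosen)


def kfold_splits(n_sessions, k=10):
    """Yield (train_idx, test_idx) so every session is held out exactly once.

    Sessions are assigned to folds in an interleaved way (an illustrative
    choice). In a nested scheme, train_idx would be split again to get a
    validation set for early stopping and model selection.
    """
    folds = [list(range(i, n_sessions, k)) for i in range(k)]
    for i, test_idx in enumerate(folds):
        train_idx = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train_idx, test_idx


if __name__ == "__main__":
    # Made-up held-out probabilities of the chosen action, for illustration only.
    model_a = [0.9, 0.7, 0.8, 0.6]
    model_b = [0.8, 0.6, 0.7, 0.6]
    print("chance:", trial_averaged_nll([0.5] * 4))
    print("model A:", trial_averaged_nll(model_a))
    print("model B:", trial_averaged_nll(model_b))
```

For a two-choice task, a model that always predicts 0.5 scores ln 2 ≈ 0.693, a useful reference point when reading model comparisons.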

Interpretability through Dynamical Systems

The study uses tools like phase portraits and vector fields to visualize internal RNN dynamics.

It identifies behavioral phenomena missed by traditional models:

Non-constant learning rates

Reward-induced indifference

State-dependent perseveration
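For a one-dimensional model, a phase portrait reduces to plotting the change in the hidden variable, Δx = f(x, input) − x, against x for each input condition (for example, each action/reward combination). The sketch below computes such a field, its fixed points, and its local slopes. The `classical_update` rule, its constant learning rate `ALPHA`, and the per-condition targets are hypothetical stand-ins rather than the study's fitted models: under a constant learning rate the field is a straight line with slope −α, so, roughly speaking, slopes that vary with x in a fitted RNN's field correspond to the non-constant learning rates listed above.

```python
def vector_field(update, grid, condition):
    """Return (x, dx) pairs with dx = update(x, condition) - x."""
    return [(x, update(x, condition) - x) for x in grid]


def fixed_points(field):
    """Zero crossings of dx, located by linear interpolation between grid points."""
    points = []
    for (x0, d0), (x1, d1) in zip(field, field[1:]):
        if d0 == 0:
            points.append(x0)
        elif d0 * d1 < 0:
            points.append(x0 - d0 * (x1 - x0) / (d1 - d0))
    if field and field[-1][1] == 0:
        points.append(field[-1][0])
    return points


def local_slopes(field):
    """Finite-difference slope of dx with respect to x between neighbouring grid points."""
    return [(d1 - d0) / (x1 - x0) for (x0, d0), (x1, d1) in zip(field, field[1:])]


# Hypothetical one-dimensional model: x is a preference (logit) for one action
# that moves toward a condition-specific target at a constant learning rate.
ALPHA = 0.3
TARGETS = {"rewarded": 2.0, "unrewarded": -2.0}


def classical_update(x, condition):
    return x + ALPHA * (TARGETS[condition] - x)


grid = [i * 0.5 for i in range(-10, 11)]

if __name__ == "__main__":
    for condition in TARGETS:
        field = vector_field(classical_update, grid, condition)
        slopes = local_slopes(field)
        print(condition, "fixed points:", fixed_points(field),
              "slope range:", (min(slopes), max(slopes)))
```

Swapping `classical_update` for the one-step update of a fitted one-unit RNN would give the corresponding field for that model; curvature in that field is what a straight-line classical update cannot express.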

Cognitive Discovery

Tiny RNNs uncovered novel mechanisms such as:

“Drift-to-the-other” forgetting

Action value redistribution

Reward-modulated decision uncertainty

Low Behavioral Dimensionality

The best-performing models needed only 1–4 latent variables, suggesting that even complex behavior may arise from low-dimensional cognitive processes.

Scalable and Generalizable

The framework can be extended to larger populations, task-optimized agents (via meta-RL), and even clinical applications like computational psychiatry.

Implications

This work provides a powerful and generalizable framework for studying cognition. By modeling behavior as a low-dimensional dynamical system, it bridges the gap between data-driven machine learning and interpretable neuroscience.

Enables individual-level modeling with small datasets

Reveals new cognitive patterns overlooked by classical methods

Offers a basis for understanding both healthy and dysfunctional decision-making

Limitations and Future Work

Current models are based on the GRU architecture, which may limit the dynamics they can express in more complex tasks.

Future directions include:

Exploring disentangled RNNs or transformers

Modeling naturalistic behavior

Linking behavior to neural activity for full cognitive modeling

Data & Code

All datasets and source code are open access, allowing for reproducibility and further research.
